Feature Selection with Python

““Natural selection eliminates and maybe maintains, but it doesn’t create.””

Goals:

Discuss feature selection methods available in Sci-Kit (sklearn.feature_selection), including cross-validated Recursive Feature Elimination (RFECV) and Univariate Feature Selection (SelectBest);

Discuss methods that can inherently be used to select regressors, such as Lasso and Decision Trees - Embedded Models (SelectFromModel);

Demonstrate forward and backward feature selection methods using statsmodels.api; and

Correlation coefficients as feature selection tool

Overview:

In real world analytics, we often come across a large volume of candidate regressors, but most end up not being useful in regression modeling. Finding the most appropriate set of regressors is a variable selection issue. Unfortunately, variable selection has two conflicting goals: (a) on the one hand, we try to include as many regressors as possible so that we can maximize the explanatory power of our model, (b) on the other hand, we want as few predictors as possible because more regressors could lead to an increased variance in the prediction.

When starting out with a very large feature set, deleting some of them, often results in a model with better precision. This is not surprising because when we retain variables with zero coefficients or coefficients with values less than their standard errors, the parameter estimates and the predicted response increase unreasonably. However, deleting variables could also increase bias into estimates of the coefficients and the response. Fortunately, we can find a point where the deletion of variables has a small impact, and the error (MSE) associated with parameter estimates will be smaller than the reduction in variance.

Metrics to use when evaluating what to keep or discard:

When evaluating which variable to keep or discard, we need some evaluation criteria. Many people decide on R squared, but other metrics may be better because R squared will always increase with the addition of newer regressors. Adjusted R squared is a metric that does not necessarily increase with the addition of variables. It only increases if the partial F statistic used to test the significance of additional regressors is greater than 1. Other metrics may also be used such as Residual Mean Square, Mallow’s Cp statistic, AIC and BIC, metrics that evaluate model error on the training dataset in machine learning.

Methods to evaluate what to keep or discard:

Several strategies are available when selecting features for model fitting. Traditionally, most programs such as R and SAS offer easy access to forward, backward and stepwise regressor selection. With a little work, these steps are available in Python as well. I have seen people try filtering methods, where they assess each regressor’s correlation with the dependent variable or check univariate tests that evaluate the relationship between each independent regressor and the dependent variable. Filtering is usually based on an arbitrary (or normative) threshold that allows the analyst to discard features. The third group of potential feature reduction methods are actual methods, that are designed to remove features without predictive value. A shrinkage method, Lasso Regression (L1 penalty) comes to mind immediately, but Partial Least Squares (supervised approach) and Principal Components Regression (unsupervised approach) may also be useful. Lastly, tree based methods produce a variable importance output, which may also be extremely useful when deciding what to keep and what to eliminate.

Stepwise Feature Elimination:

There are three ways to deploy stepwise feature elimination: (a) forward, (b) backward, and (c) stepwise methods.

Forward:

Forward elimination starts with no features, and the insertion of features into the regression model one-by-one. First, the regressor with the highest correlation is selected for inclusion, which coincidentally the regressor that produces the largest F-statistic value when testing the significance of the model. This is called partial correlation because technically they represent the correlation coefficients between the model residuals with a specific variable and the model residuals with the other regressors. All subsequent regressors are selected the same way. The procedure continues until the F statistic exceeds a pre-selected F-value (called F-to-enter) and terminates otherwise.

Backward:

Backward elimination starts with all regressors in the model. The F statistic is calculated as we remove regressors on at a time. In this case, the feature with the smallest F statistic is removed from the model ands the procedure continues until the smallest partial F statistic is greater than the pre-selected cutoff value of F, and terminates otherwise. This method sounds particularly appealing, when we’d like to see how each variable affects the model.

Stepwise:

Stepwise elimination is a hybrid of forward and backward elimination and starts similarly to the forward elimination method, e.g. with no regressors. Features are then selected as described in forward feature selection, but after each step, regressors are checked for elimination as per backward elimination. The hope is that as we enter new variables that are better at explaining the dependent variable, variables already included may become redundant.

Interestingly, stepwise feature selection methods were not readily available in Python until 2019, and one had to create a custom program. Today, the method can be found on github (https://github.com/AakkashVijayakumar/stepwise-regression). I wanted to demonstrate how it works with the Boston housing data.

import pandas as pd import statsmodels.api as sm def forward_regression(X, y, initial_list=[], threshold_in=0.01, threshold_out = 0.05, verbose=True): initial_list = [] included = list(initial_list) while True: changed=False # forward step excluded = list(set(X.columns)-set(included)) new_pval = pd.Series(index=excluded) for new_column in excluded: model = sm.OLS(y, sm.add_constant(pd.DataFrame(X[included+[new_column]]))).fit() new_pval[new_column] = model.pvalues[new_column] best_pval = new_pval.min() if best_pval < threshold_in: best_feature = new_pval.argmin() included.append(best_feature) changed=True if verbose: print('Add with p-value '.format(best_feature, best_pval)) if not changed: break return included forward_regression(X_train, Y_train)

The results of forward feature selection are provided below. Note that the threshold was selected at 0.01 meaning that only variables lower than that threshold were selected. In this case 11 of 13 features. A more stringent criteria will eliminate more variables, although the 0.01 cutoff is already pretty stringent.

Add LSTAT with p-value 3.76374e-72 Add RM with p-value 2.28863e-18 Add PTRATIO with p-value 2.99591e-12 Add DIS with p-value 4.02689e-05 Add B with p-value 5.63547e-05 Add NOX with p-value 0.0010313 Add CHAS with p-value 0.00226873 Add RAD with p-value 0.00457061 Add CRIM with p-value 0.00106018 Add TAX with p-value 0.00869266 Add ZN with p-value 0.00162973 ['LSTAT', 'RM', 'PTRATIO', 'DIS', 'B', 'NOX', 'CHAS', 'RAD', 'CRIM', 'TAX', 'ZN']

Now we can assess the backward elimination procedure. It appears that this method also selected the same variables and eliminated INDUS and AGE.

<p>Helldef backward_regression(X, y, initial_list=[], threshold_in=0.01, threshold_out = 0.05, verbose=True): included=list(X.columns) while True: changed=False model = sm.OLS(y, sm.add_constant(pd.DataFrame(X[included]))).fit() # use all coefs except intercept pvalues = model.pvalues.iloc[1:] worst_pval = pvalues.max() # null if pvalues is empty if worst_pval > threshold_out: changed=True worst_feature = pvalues.idxmax() included.remove(worst_feature) if verbose: print('Drop with p-value '.format(worst_feature, worst_pval)) if not changed: break return included backward_regression(X_train, Y_train)o, World!</p>

Drop INDUS with p-value 0.987936 Drop AGE with p-value 0.938842 ['CRIM', 'ZN', 'CHAS', 'NOX', 'RM', 'DIS', 'RAD', 'TAX', 'PTRATIO', 'B', 'LSTAT']

Feature Selection with Sci-Kit:

Several methodologies of feature selection are available in Sci-Kit in the sklearn.feature_selection module. They include Recursive Feature Elimination (RFE) and Univariate Feature Selection. Feature selection using SelectFromModel allows the analyst to make use of L1-based feature selection (e.g. Lasso) and tree-based feature selection.

Recursive Feature Elimination:

A popular feature selection method within sklearn is the Recursive Feature Elimination. RFE selects features by considering a smaller and smaller set of regressors. The starting point is the original set of regressors. Less important regressors are recursively pruned from the initial set. The procedure is repeated until a desired set of features remain. That number can either be a priori specified, or can be found using cross validation. In fact, RFE offers a variant – RFECV – designed to optimally find the best subset of regressors.

#RECURSIVE FEATURE ELIMINATION #Feature ranking with recursive feature elimination and cross-validated selection of the best number of features from sklearn.feature_selection import RFE from sklearn.feature_selection import RFECV X = X_train y = Y_train names=pd.DataFrame(X_train.columns) #use linear regression as the model lin_reg = LinearRegression() #This is to select 5 variables: can be changed and checked in model for accuracy rfe_mod = RFE(lin_reg, 5, step=1) #RFECV(lin_reg, step=1, cv=5) myvalues=rfe_mod.fit(X,y) #to fit myvalues.support_#The mask of selected features. myvalues.ranking_ #The feature ranking, such that ranking_[i] corresponds to the ranking position of the i-th feature. Selected (i.e., estimated best) features are assigned rank 1. rankings=pd.DataFrame(myvalues.ranking_) #Make it into data frame rankings

| 0 | |

|---|---|

| 0 | 4 |

| 1 | 5 |

| 2 | 9 |

| 3 | 1 |

| 4 | 1 |

| 5 | 1 |

| 6 | 8 |

| 7 | 1 |

| 8 | 3 |

| 9 | 6 |

| 10 | 1 |

| 11 | 7 |

| 12 | 2 |

Variables in the 4-6, 8 and 11 position ( a total of 5 variables) were selected for inclusion in a model. This is because we specified 5 variables as the preferred number of features. This could be increased or decreased as needed. At this point, the feature names are not printed, only their position.

#Concat and name columns ranked=pd.concat([names,rankings], axis=1) ranked.columns = ["Feature", "Rank"] ranked #Select most important (Only 1's) most_important = ranked.loc[ranked['Rank'] ==1] print(most_important) most_important['Rank'].count()

Feature Rank 3 CHAS 1 4 NOX 1 5 RM 1 7 DIS 1 10 PTRATIO 1 5

The code prints the variables ranked highest above the threshold specified. Their rank is concatenated with the name of the feature for easier interpretation. The five feature threshold was specified, which may or may not be the right choice. In order to identify the most optimal features, we can use cross validation. Luckily, this is available in Sci-kit as an option. I deliberately changed the cv value to 300 fold to produce a different result. The default is 3, which results in all features selected in the Boston housing dataset. As we increase the folds, the task becomes computationally more and more expensive, but the number of variables selected reduces. In this example, the only feature selected is NOX.

#use linear regression as the model lin_reg = LinearRegression() #This is to select 8 variables: can be changed and checked in model for accuracy rfe_mod = RFECV(lin_reg, step=1, cv=300) #RFE(lin_reg, 4, step=1) myvalues=rfe_mod.fit(X,y) #to fit

Univariate Feature Selection:

UFS selects features based on univariate statistical tests, which evaluate the relationship between two randomly selected variables. In case of a continuous dependent variable, two options are available: f-regression and mutual_info_regression. Several options are available but two different ways of specifying the removal of features are (a) SelectKBest removes of all low scoring features, and (b) SelectPercentile allows the analyst to specify a scoring percent of features, and all features not reaching that threshold then are removed.

#UNIVARIATE SELECTION # Feature Extraction with Univariate Statistical Tests (f_regression) import pandas import numpy from sklearn.feature_selection import SelectKBest from sklearn.feature_selection import f_regression from sklearn.datasets import load_digits # load data X = X_train y = Y_train names=pd.DataFrame(X_train.columns) model = SelectKBest(score_func=f_regression, k=4) results = model.fit(X, y) print (results.scores_) #print (results.pvalues_) results_df=pd.DataFrame(results.scores_) #Concat and name columns scored=pd.concat([names,results_df], axis=1) scored.columns = ["Feature", "Score"] scored.sort_values(by=['Score']).head(13)

| Feature | Score | |

|---|---|---|

| 3 | CHAS | 10.998381 |

| 7 | DIS | 27.503982 |

| 11 | B | 45.463610 |

| 8 | RAD | 67.230129 |

| 6 | AGE | 67.315184 |

| 1 | ZN | 70.344372 |

| 0 | CRIM | 71.670898 |

| 4 | NOX | 89.612081 |

| 9 | TAX | 101.446889 |

| 2 | INDUS | 117.522270 |

| 10 | PTRATIO | 141.487726 |

| 5 | RM | 308.360027 |

| 12 | LSTAT | 495.926709 |

We can now rank the importance of each feature based on their score. The higher the score, the more important the variable.

A very interesting discussion on StackExchange suggests that the ranks obtained by Univariate Feature Selection using f_regression can also be achieved by computing correlation coefficients of individual features with the dependent variable. One must compute the correlation at each step. See: https://stats.stackexchange.com/questions/204141/difference-between-selecting-features-based-on-f-regression-and-based-on-r2

However, when examining correlation coefficients of each independent variable and the dependent variable at the same step, the ranks are NOT the same. Still, some analysts find the below analysis useful in deciding on which feature to use. After computing the correlation of each individual regressor and the dependent variable, a threshold will help deciding on whether to keep or discard regressors.

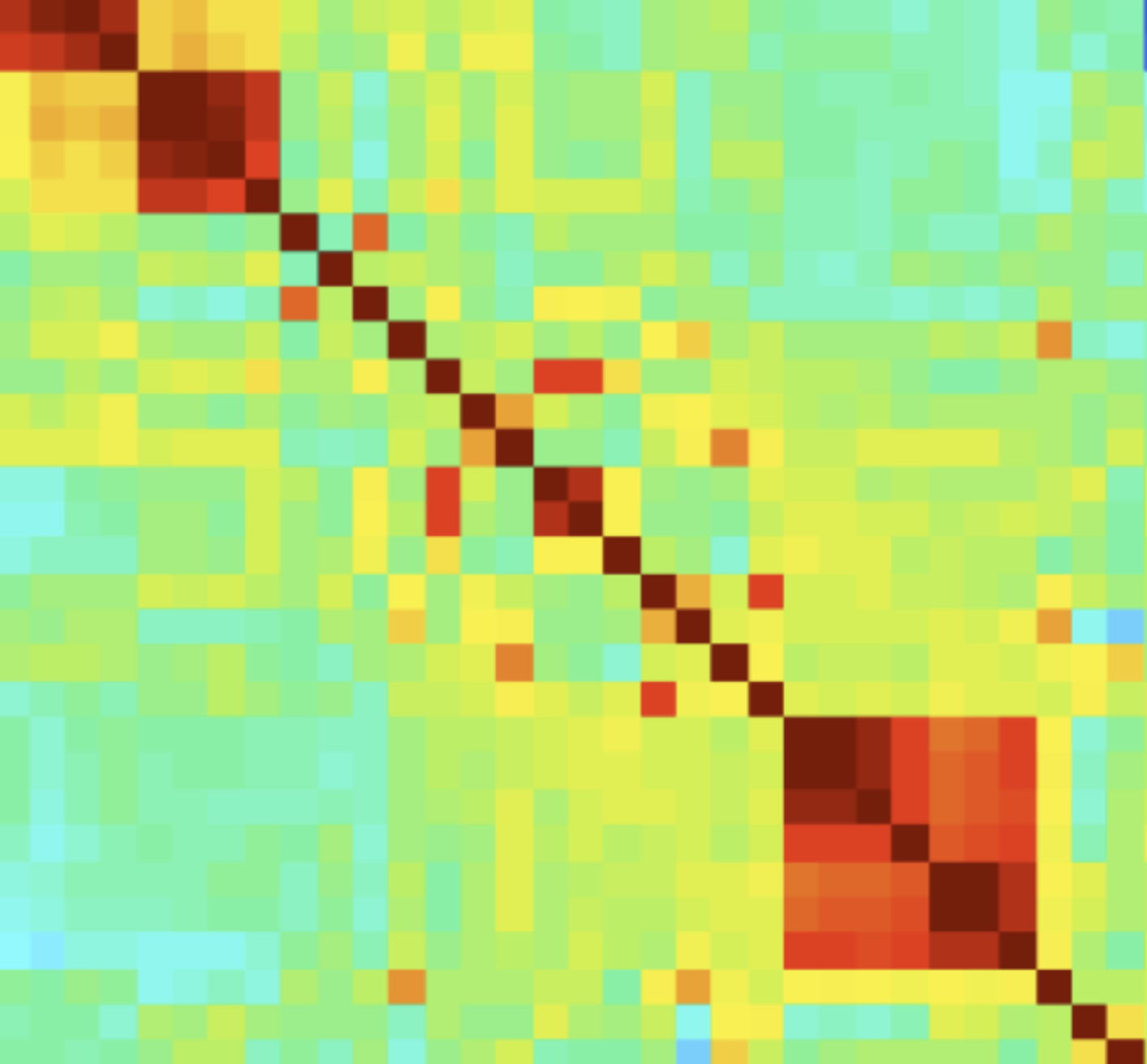

#create a single data frame with both features and target by concatonating boston_df=pd.concat([boston_features_df, boston_target_df], axis=1) boston_df.head() #Visualize corelations import seaborn as sns plt.figure(figsize=(12,10)) ax=sns.heatmap(boston_df.corr(), annot=True, cmap=sns.cubehelix_palette(20, light=0.95, dark=0.15)) ax.xaxis.tick_top plt.show()

#Correlation with dependent variable cor_MEDV = abs(cor["MEDV"]) #Set threshold at 0.6 - moderate-high correlation select_feat = cor_MEDV[cor_MEDV>0.6] select_feat

When the threshold is set at 0.6, only two variables are selected: LSTAT and RM. Their correlation coefficients are listed as well.

RM 0.695360 LSTAT 0.737663 MEDV 1.000000 Name: MEDV, dtype: float64

Feature Selection Using Shrinkage or Decision Trees:

Lasso (L1) Based Feature Selection:

Several models are designed to reduce the number of features. One of the shrinkage methods - Lasso - for example reduces several coefficients to zero leaving only features that are truly important. For a discussion on Lasso and L1 penalty, please click:

Sci-Kit offers SelectFromModel as a tool to run embedded models for feature selection. The module makes use of a threshold parameter, which can be either user specified or heuristically set based on median or mean. Below, the code uses Lasso (L1 penalty) to find features for inclusion. I set the threshold to 0.25, which results in six features being selected. The get the names of the selected variables, a mask (integer index) of the features selected must be used by calling get_support().

import matplotlib.pyplot as plt import numpy as np from sklearn.datasets import load_boston from sklearn.feature_selection import SelectFromModel from sklearn.linear_model import LassoCV # load data X = X_train y = Y_train # Use L1 penalty estimator = LassoCV(cv=5, normalize = True) # Set a minimum threshold of 0.25 sfm = SelectFromModel(estimator, threshold=0.25, prefit=False, norm_order=1, max_features=None) sfm.fit(X, y) feature_idx = sfm.get_support() feature_name = X.columns[feature_idx] feature_name # n_features = sfm.transform(X).shape[1] # n_features

Index(['CHAS', 'NOX', 'RM', 'DIS', 'RAD', 'PTRATIO', 'LSTAT'], dtype='object')

Tree-based Feature Selection:

Decision trees or other tree-based models contain a variable importance output that can be used to decide, which feature to select for inclusion. Features that are closer to the root of the tree are more important than those at end splits, which are not as relevant. I am working on a discussion about decision trees, so check back for them soon.